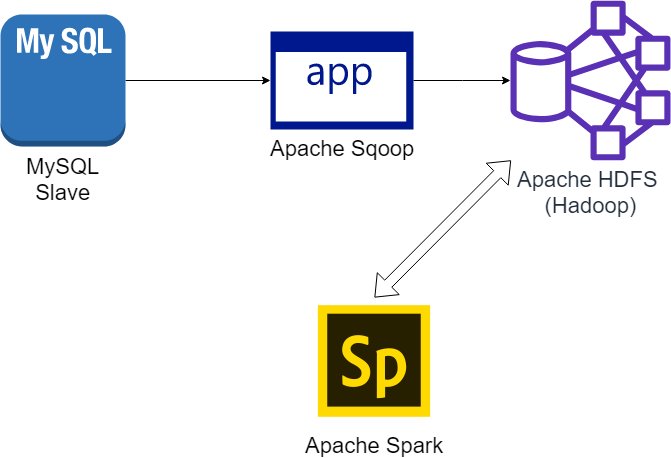

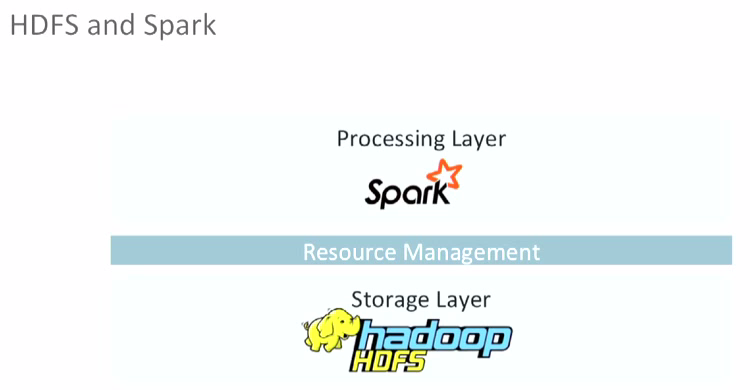

We should look at Spark as an alternative to Hadoop MapReduce rather than as a replacement for Hadoop. It is not intended to replace Hadoop but to provide a comprehensive and unified solution for managing different big data use cases and requirements. Spark runs on top of existing Hadoop Distributed File System (HDFS) infrastructure to provide enhanced and additional functionality. Spark applications can be deployed in an existing Hadoop v1 cluster (with SIMR, Spark-Inside-MapReduce), in a Hadoop v2 YARN cluster, or even on Apache Mesos.

Spark takes MapReduce to the next level with less expensive shuffles in the data processing, and it supports more than just Map and Reduce functions. With capabilities like in-memory data storage and near real-time processing, its performance can be several times faster than other big data technologies. Spark also supports lazy evaluation of big data queries, which helps optimize the steps in data processing workflows. It provides a higher-level API to improve developer productivity and a consistent architectural model for big data solutions.

Spark holds intermediate results in memory rather than writing them to disk, which is especially useful when you need to work on the same data set multiple times. It is designed to be an execution engine that works both in memory and on disk. Spark will attempt to store as much data in memory as it can and then spill to disk, so it can keep part of a data set in memory and the remainder on disk. When data does not fit in memory, Spark operators perform external operations, which means Spark can process data sets that are larger than the aggregate memory in a cluster. This in-memory data storage gives Spark its performance advantage, but you have to look at your data and use cases to assess the memory requirements. Spark also supports in-memory data sharing across DAGs, so that different jobs can work with the same data.
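
The combination of lazy evaluation and in-memory reuse of intermediate results can be illustrated with a small toy model in plain Python. This is only a sketch of the idea, not Spark's API: the `LazyDataset` class, its `cache`, `collect` and `total` methods, and the `runs` counter are all hypothetical names invented for this example.

```python
class LazyDataset:
    """Toy lazily evaluated dataset (hypothetical; not Spark's API)."""

    def __init__(self, compute):
        self._compute = compute        # zero-argument function returning an iterable
        self._keep_in_memory = False   # set by cache()
        self._cached = None            # materialized rows, once computed
        self.runs = 0                  # how many times this dataset was computed

    def map(self, fn):
        # Only records the transformation; no data is touched yet.
        return LazyDataset(lambda: (fn(x) for x in self._rows()))

    def filter(self, pred):
        # Also lazy: returns a new dataset describing the filtered view.
        return LazyDataset(lambda: (x for x in self._rows() if pred(x)))

    def cache(self):
        # Ask for the result to be kept in memory after the first computation.
        self._keep_in_memory = True
        return self

    def _rows(self):
        if self._cached is not None:
            return self._cached        # reuse the in-memory result
        self.runs += 1
        rows = self._compute()
        if self._keep_in_memory:
            self._cached = list(rows)
            return self._cached
        return rows

    def collect(self):
        # An "action": this is what actually triggers the computation.
        return list(self._rows())

    def total(self):
        # A second action over the same dataset.
        return sum(self._rows())


numbers = LazyDataset(lambda: range(1, 11))
even_squares = numbers.filter(lambda x: x % 2 == 0).map(lambda x: x * x).cache()

runs_before_action = even_squares.runs   # still 0: nothing has been computed
squares = even_squares.collect()         # first action runs the pipeline
squares_sum = even_squares.total()       # second action reuses the cached rows
```

After both actions, `even_squares.runs` is 1: the pipeline ran once and the second action read the cached result from memory. Without the `cache()` call, each action would recompute the whole chain from the source.
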
Spark allows programmers to develop complex, multi-step data pipelines using a directed acyclic graph (DAG) pattern. In a chain of MapReduce jobs, by contrast, each job was high-latency, and none could start until the previous job had finished completely.
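
As a rough illustration of the DAG pattern, the sketch below runs a four-step pipeline in dependency order and keeps every intermediate result in memory so that two branches can share the same input. It uses `graphlib.TopologicalSorter` from the Python standard library (Python 3.9 or later). The `run_dag` helper and the step names are assumptions made for this example, not how Spark schedules work internally.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+


def run_dag(steps, upstream):
    """Run each step once, after all of its upstream steps have finished.

    steps:    step name -> function taking the upstream results as positional arguments
    upstream: step name -> list of step names whose results it consumes
    """
    order = list(TopologicalSorter(upstream).static_order())
    results = {}  # in-memory store shared by every downstream step
    for name in order:
        inputs = [results[dep] for dep in upstream[name]]
        results[name] = steps[name](*inputs)
    return order, results


# Hypothetical pipeline: one load step feeds two branches that are joined at the end.
steps = {
    "load": lambda: [3, 1, 4, 1, 5, 9, 2, 6],
    "evens": lambda rows: [x for x in rows if x % 2 == 0],
    "odds": lambda rows: [x for x in rows if x % 2 == 1],
    "summary": lambda evens, odds: {"even_sum": sum(evens), "odd_sum": sum(odds)},
}
upstream = {
    "load": [],
    "evens": ["load"],
    "odds": ["load"],
    "summary": ["evens", "odds"],
}

order, results = run_dag(steps, upstream)
```

The `load` step runs once and both `evens` and `odds` read its result from memory. The relative order of the two branches is not fixed, since neither depends on the other.
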

If you wanted to do something complicated, you would have to string together a series of MapReduce jobs and execute them in sequence.

Hadoop as a big data processing technology has been around for 10 years and has proven to be the solution of choice for processing large data sets. MapReduce is a great solution for one-pass computations, but it is not very efficient for use cases that require multi-pass computations and algorithms. Each step in the data processing workflow has one Map phase and one Reduce phase, and you need to convert any use case into the MapReduce pattern to leverage this solution.

The job output data between each step has to be stored in the distributed file system before the next step can begin. Hence, this approach tends to be slow due to replication and disk storage. Hadoop solutions also typically include clusters that are hard to set up and manage, and they require the integration of several tools for different big data use cases (like Mahout for machine learning and Storm for streaming data processing).

In addition to Map and Reduce operations, Spark supports SQL queries, streaming data, machine learning and graph data processing. Developers can use these capabilities stand-alone or combine them in a single data pipeline use case. In this first installment of the Apache Spark article series, we look at what Spark is, how it compares with a typical MapReduce solution and how it provides a complete suite of tools for big data processing.
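
To make the cost of chaining MapReduce jobs more concrete, here is a toy sketch in plain Python. It is not Hadoop's API: `run_mapreduce_job` and `run_two_job_chain` are hypothetical helpers, and JSON files in a temporary directory stand in for the distributed file system. The point it tries to show is that the second job can only start once the first job has written its complete output to storage.

```python
import json
import tempfile
from collections import defaultdict
from pathlib import Path


def run_mapreduce_job(input_path, output_path, mapper, reducer):
    """One toy job: read input from disk, map, group by key, reduce, write to disk."""
    records = json.loads(Path(input_path).read_text())
    groups = defaultdict(list)
    for record in records:
        for key, value in mapper(record):
            groups[key].append(value)          # stand-in for the shuffle phase
    output = [[key, reducer(key, values)] for key, values in sorted(groups.items())]
    Path(output_path).write_text(json.dumps(output))


def run_two_job_chain(lines):
    """Job 1 counts words; job 2 groups the words by how often they occur."""
    with tempfile.TemporaryDirectory() as workdir:
        raw = Path(workdir) / "input.json"
        counts = Path(workdir) / "word_counts.json"    # intermediate output on disk
        by_count = Path(workdir) / "words_by_count.json"
        raw.write_text(json.dumps(lines))

        # Job 1: (line) -> (word, 1) -> (word, total)
        run_mapreduce_job(
            raw, counts,
            mapper=lambda line: [(word, 1) for word in line.split()],
            reducer=lambda word, ones: sum(ones),
        )
        # Job 2 reads job 1's output file, so it cannot begin before job 1 finishes.
        run_mapreduce_job(
            counts, by_count,
            mapper=lambda pair: [(pair[1], pair[0])],
            reducer=lambda count, words: sorted(words),
        )
        return json.loads(by_count.read_text())


words_by_count = run_two_job_chain(["spark hadoop spark", "hadoop mesos", "spark yarn"])
```

Every extra step in such a chain adds another full write and read of the intermediate data, which is the overhead an in-memory engine tries to avoid.
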
